Using the DomainTools Feed API in Splunk


Security teams rely on timely, accurate domain intelligence to discover and respond to threats before they cause damage. Historically, our threat intelligence feeds were delivered daily via SFTP. While this method provides valuable data, an increase in intraday adversarial attacks has created a greater demand for more frequent delivery.

DomainTools is now introducing a real-time Feed API access method, enabling customers to query domain intelligence as frequently as every 60 seconds. This upgrade means faster detection, more flexible integration, and the ability to adapt to fast-moving threat landscapes. Whether your security team is investigating a phishing campaign, monitoring suspicious infrastructure, or powering automated defenses, this new API helps ensure you have the freshest data when and where you need it most.

Key Improvements

- Near-real-time: Retrieve new data as often as every 60 seconds to shrink the attacker's window of opportunity.

- Programmatic control: Integrate data directly into security tools and workflows without having to manage file downloads.

- Exactly-once guarantees: The sessionID parameter and integrated session management provide secure, reliable delivery with no duplicated or lost data.

- Server-side filtering: Leverage various parameters to retrieve a specific subset of data, for example, by filtering for domains containing specific substrings.

A Real-World Use Case: Integrating with Splunk SIEM

To make domain intelligence more accessible to security teams, we’ve designed our new Feed API to allow turnkey integration into most SIEMs, TIPs, and SOARs, without requiring an app. DomainTools offers a variety of data feeds mainly focused on newly discovered domains and those predicted to be high-risk. Examples from these categories include the Domain Discovery Feed and the Domain Hotlist Feed.

In this use case, I’ll demonstrate how to use the new Feed API to ingest the Newly Observed Domains (NOD) feed directly into Splunk SIEM – even if you don’t have Splunk ES. With the appropriate permissions and prerequisites in place, expect setup to take around an hour. By the end of this blog post, we’ll have a real-time stream of young domains continuously ingested into Splunk, and we’ll discuss a handful of use cases for applying the data to proactive blocking, threat hunting, and detection.

Step 1: Getting Started with the Feed API in Splunk

Prerequisites:

- Access to DomainTools Real-Time Threat Feeds

- DomainTools API Credential

- Access to Splunk with permission to create indexes and reports

- Webtool Add-on (Curl command)

- There are a few other add-ons that provide a similar “curl” command, but for this guide, we will be using the “Webtool Add-on”.

Step 2: Ingesting Domain Intelligence Data

- Log in to Splunk

- Create a new index.

- Settings > Indexes > New Index

- Input a value for the name of the new index

- For this example, you only need to set the index name; leave the remaining settings at their defaults.

- Example: nod_apex_domains

- Click “Save”

- Go to the “Search and Reporting” app within Splunk

- Paste the following SPL query into the search input


| makeresults

| eval header="{\"X-Api-Key\":\"YOUR_API_KEY\"}"

| curl method=get uri="https://api.domaintools.com/v1/feed/nod/?sessionID=YOUR_SESSION_ID" headerfield=header

| eval _time=now()

| makemv tokenizer="([^\r\n]+)(\r\n)?" curl_message

| mvexpand curl_message

| spath input=curl_message

| table _time, timestamp, domain

| eval domain_lower=lower(domain)

| eval parts=split(domain_lower, ".")

| eval apex_domain = mvindex(parts, 0)

| table _time, timestamp, domain, apex_domain

| collect index=nod_apex_domains

- Let’s review some of the syntax:

- In “| eval header”, replace YOUR_API_KEY with your DomainTools API key.

- The “| curl” command will retrieve the data for the given session ID for the NOD feed.

- An important field is the “sessionID”: a string that serves as a unique identifier for the session. This allows your ingestion process to resume data retrieval from the last delivered data point, so replace YOUR_SESSION_ID with a string that uniquely identifies your ingestion job (e.g., COMPANYNAME_SPLUNK).

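- Regarding parts=split(), I chose to extract the leftmost label of the domain (the second-level label for a domain like example.com) so that keywords such as brand names can be compared directly against it. Note that the apex_domain field therefore holds this label, not the full apex domain.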

- Regarding “| collect”, this is to add the results of a search to a summary index. The index name should match what was created earlier (nod_apex_domains in this example).
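For readers who want to see the same logic outside Splunk, here is a minimal Python sketch of what the query above does: build the request with the API key header and sessionID, split the response into lines, parse each line as JSON, and take the leftmost label of the domain. It assumes the response is newline-delimited JSON with `timestamp` and `domain` fields, which is inferred from the SPL rather than from the API reference. The function names and the sample payload are made up for illustration.

```python
import json
import urllib.parse
import urllib.request

# Endpoint and header name are taken from the SPL query above.
FEED_URL = "https://api.domaintools.com/v1/feed/nod/"


def build_feed_request(api_key: str, session_id: str) -> urllib.request.Request:
    """Build (but do not send) the GET request issued by the `| curl` step."""
    query = urllib.parse.urlencode({"sessionID": session_id})
    return urllib.request.Request(
        f"{FEED_URL}?{query}", headers={"X-Api-Key": api_key}, method="GET"
    )


def parse_feed(body: str) -> list:
    """Split the body into lines and parse each one as JSON.

    Mirrors makemv + mvexpand + spath, then the split()/mvindex() step.
    Assumes one JSON object per line (an assumption, see the lead-in).
    """
    rows = []
    for line in body.splitlines():
        if not line.strip():
            continue  # skip blank lines between records
        record = json.loads(line)
        domain_lower = record["domain"].lower()
        rows.append({
            "timestamp": record.get("timestamp"),
            "domain": record["domain"],
            # Same as mvindex(split(domain_lower, "."), 0): the leftmost label
            "apex_domain": domain_lower.split(".")[0],
        })
    return rows


# Made-up sample payload; real feed records may carry additional fields.
sample = (
    '{"timestamp": "2025-01-01T00:00:00Z", "domain": "Example-Shop.example"}\r\n'
    '{"timestamp": "2025-01-01T00:00:05Z", "domain": "login-portal.test"}\r\n'
)
rows = parse_feed(sample)
```

To actually fetch data, the request could be passed to `urllib.request.urlopen` and the decoded body handed to `parse_feed`; reusing the same sessionID on each poll is what lets the feed resume from the last delivered data point.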

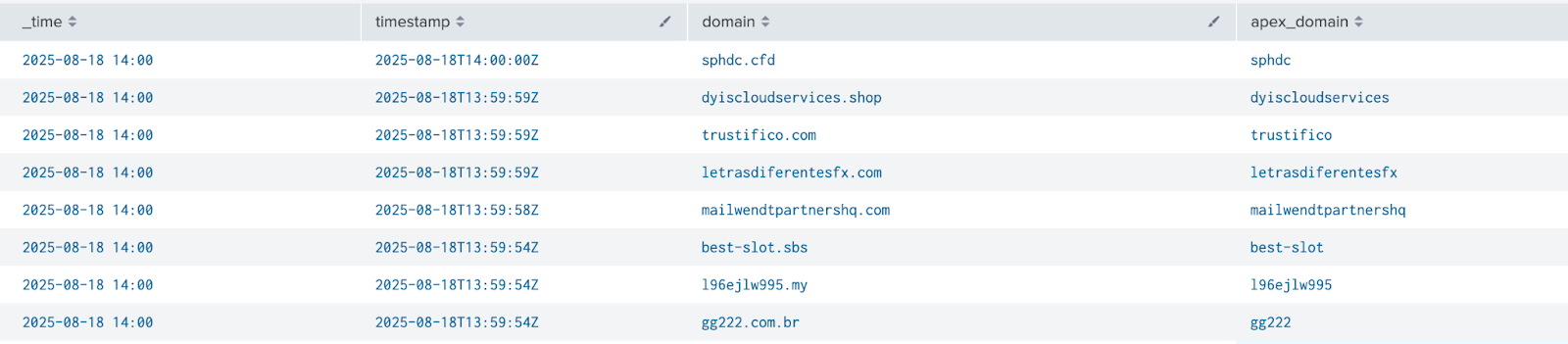

- Run the search and you should see a similar output to below:

- In order to schedule the search to run regularly you’ll need to first save it as a report

- Click on “Save As” and select “Report”

- Give it a name and description. You can select “No” for “Time Range Picker”

- Click “Save”

- A pop-up screen will appear after saving the report. Click “Schedule” to edit the schedule for the search.

- Select “Schedule Report”

- Choose a relative schedule or be more specific with a Cron Schedule. The API can be queried as often as every minute.

- Click “Save”

- Now, when you visit “Reports” you will see your newly created report

- If you go to “Search” again and run the SPL below with the name of your index, you’ll be able to view the contents.


index="nod_apex_domains"

| table _time, timestamp, domain, apex_domain

Completing Step 2 ingests NOD data into a Splunk index at a regular interval. Once the data is flowing, there are several use cases you can apply to derive value from it.

Step 3: Your Security Organization on Newly Observed Domains

There are multiple ways security organizations use Newly Observed Domains that are worthwhile to consider.

Possible Use Cases:

- Proactive Threat Blocking

- Hunting for new attacker infrastructure

- Discovering typosquatting and brand impersonation

- Discovering new domains created with domain generation algorithms

- Correlation with internal logs

- Behavioral analysis

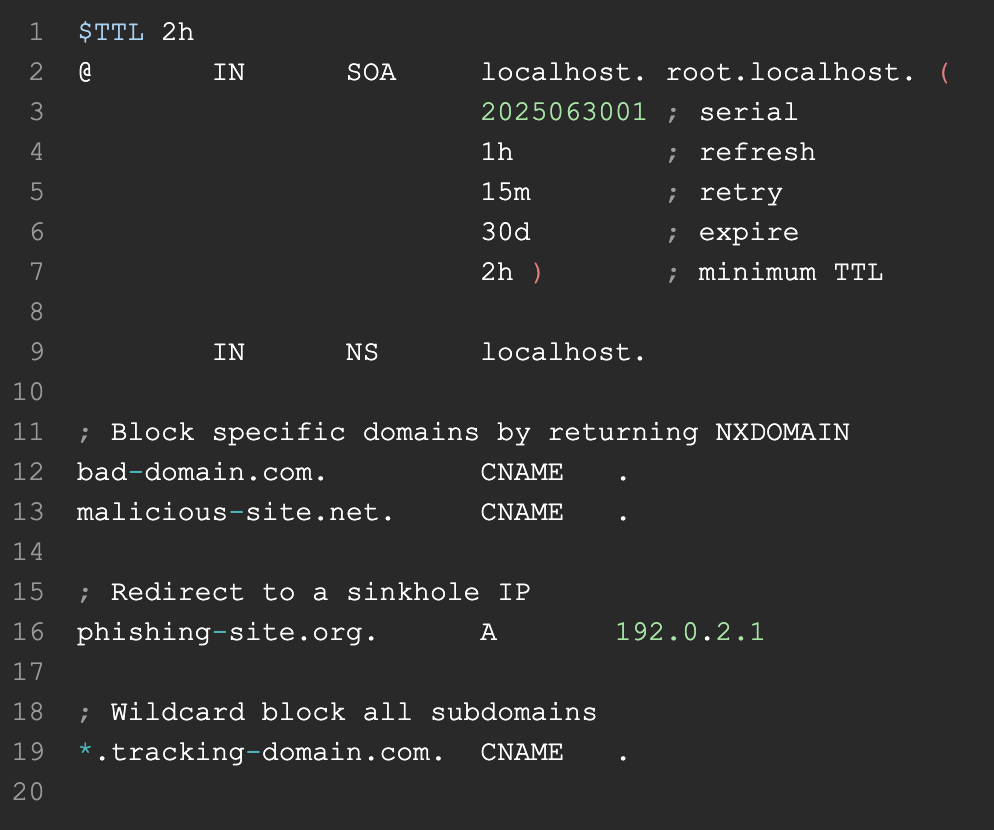

Proactive Threat Blocking

You may be thinking, any domain that is 24 hours old or younger is not worth exposing to my internal network or customer base. This is a view shared by many in the information security industry and exactly why they will use the NOD feed. With the NOD feed, blocking young domains becomes much easier.

The decision then becomes where to block. While our customers typically use several points on their perimeter for blocking, we recommend blocking at the DNS layer as a fundamental part of your defense strategy. Using a Response Policy Zone (RPZ) to sinkhole newly observed domains to a defender-controlled IP is often the most effective method.
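As a rough illustration of the RPZ approach, the Python sketch below turns a list of domains into RPZ record lines that answer both the domain and its subdomains with a sinkhole address. The function name, the sample domains, and the sinkhole IP (from the 192.0.2.0/24 documentation range) are placeholders. The zone's SOA and NS records, and the resolver configuration that loads the zone, are left out.

```python
import ipaddress


def rpz_sinkhole_records(domains, sinkhole_ip):
    """Return RPZ record lines pointing each domain at the sinkhole.

    Owner names are written relative to the RPZ zone origin, so they
    carry no trailing dot.
    """
    ip = ipaddress.ip_address(sinkhole_ip)  # raises ValueError on bad input
    rtype = "A" if ip.version == 4 else "AAAA"
    # Normalize, de-duplicate, and sort for a stable zone file.
    cleaned = sorted({d.strip().lower().rstrip(".") for d in domains if d.strip()})
    lines = []
    for domain in cleaned:
        lines.append(f"{domain} {rtype} {ip}")    # the domain itself
        lines.append(f"*.{domain} {rtype} {ip}")  # any subdomain
    return lines


# Placeholder domains on reserved TLDs.
records = rpz_sinkhole_records(
    ["New-Shop.example", "login-portal.test"], "192.0.2.10"
)
```

In practice the domain list would come from the index populated in Step 2, and the generated lines would be appended to a zone file that your resolver is configured to use as a response policy zone.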

Hunting for new attacker infrastructure

If a large pile of young domains sounds like fertile ground for a threat hunt, you aren’t alone. It’s a prime place to look for new adversaries targeting your industry or those you depend on. By filtering the list for naming schemes or enriching it with contextual data, a hunter can find patterns tied to a specific threat actor.

For example, I looked at domains from the NOD list where the term “support” was present in the domain name. The domain csupport[.]top was a hit and shares a Netherlands-based IP address with csonlinesupport[.]com. Both domains have a high domain risk score, and the Internet Service Provider (ISP) associated with the IP address has a poor reputation. On top of that, the captured screenshot shows a login page for a remote desktop software application.

Observing shared infrastructure with a poor reputation, a similar naming scheme, and suspicious web pages all serve as critical intelligence that a threat intelligence team can use to better protect their organization and expand its knowledge of a threat actor’s tactics.
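The first filtering step of a hunt like this is simple enough to sketch in a few lines of Python. The example below groups domains by the hunt keywords they contain. The function name and the sample domains are hypothetical, and the enrichment described above (hosting IP, risk score, screenshots) is not part of this sketch.

```python
from collections import defaultdict


def hunt_by_keywords(domains, keywords):
    """Group domains by each keyword found anywhere in the domain name."""
    hits = defaultdict(list)
    for domain in domains:
        name = domain.lower()
        for keyword in keywords:
            if keyword.lower() in name:
                hits[keyword].append(domain)
    return dict(hits)


# Placeholder domains on reserved TLDs.
young_domains = [
    "acme-support.example",
    "weather-today.example",
    "onlinesupport-desk.test",
    "secure-login.test",
]
hits = hunt_by_keywords(young_domains, ["support", "login"])
```

Grouping by keyword keeps related hits side by side, which makes shared naming schemes easier to spot before pivoting on infrastructure.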

Discovering typosquatting and brand impersonation

One of the most common use cases for ingesting newly observed domains is brand protection and identifying typosquatting. An SPL query can evaluate the Levenshtein Distance between two terms, that is, the number of single-character insertions, deletions, or substitutions needed to turn one term into the other. This is typically one of several methods teams use to find domains typosquatting their brands or key terms.

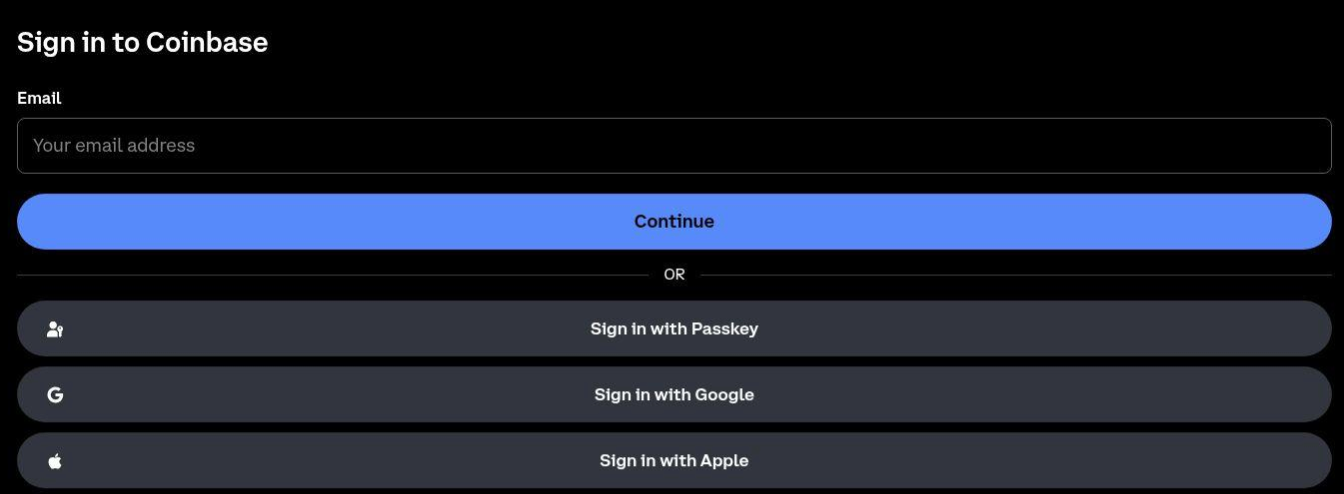

By filtering for domains with a Levenshtein Distance of 2 or less from ‘Coinbase’, I discovered the suspicious domain coinbasee[.]help. Its registration footprint immediately stood out with several key differences from the legitimate Coinbase site, including the use of a different registrar, name servers, and internet service provider. The choice of a less common top-level domain like .help and its high Domain Risk Score—a metric predicting malicious intent—further increased my suspicion.

The domain also had a suspicious sign-in page signaling the potential to be used for credential harvesting.

Being able to gather new domains as soon as they are created, filtering them to those potentially spoofing a brand, and triaging them quickly to protect against domains like this significantly reduces a security organization’s risk exposure and improves the ability to stay ahead of emerging threats.
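To make the distance filter concrete, here is a small Python sketch of the same idea: compute the Levenshtein distance between a brand term and the leftmost label of each domain, and keep those at distance 2 or less. This is a textbook dynamic-programming implementation, not the one used inside Splunk, and the sample domains are placeholders on a reserved TLD.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance using insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def lookalikes(domains, brand, max_distance=2):
    """Return (domain, distance) pairs whose leftmost label is near the brand."""
    results = []
    for domain in domains:
        label = domain.lower().split(".")[0]
        distance = levenshtein(label, brand.lower())
        if distance <= max_distance:
            results.append((domain, distance))
    return results


# Placeholder domains; only the first two are close to the brand term.
candidates = ["coinbasee.example", "c0inbase.example", "weather.example"]
close = lookalikes(candidates, "Coinbase")
```

A threshold of 2 catches doubled, dropped, and swapped characters. For short brand names it also produces more false positives, so the threshold is worth tuning per brand.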

Discovering new domains created with domain generation algorithms

Domains created by domain generation algorithms (DGAs) are often associated with maliciousness. Discovering multiple domains created by a DGA can help in identifying a pattern, which can be used to find others created by the same DGA.

If there is an observable pattern, using a regular expression to find domains matching the pattern is a common practice. Using a regular expression with the NOD feed is an excellent way to identify domains created by a DGA quickly.

For example, while looking at domains related to the term coinbase, I found domains like 0218652-coinbase[.]com, which consist of a random series of seven digits followed by a “-” and then the term “coinbase”. I crafted a regular expression to filter the NOD list for domains matching this pattern, and over the course of seven days there were seven matches:

2288544-coinbase[.]com

6929481-coinbase[.]com

2188194-coinbase[.]com

1994485-coinbase[.]com

5992932-coinbase[.]com

4991496-coinbase[.]com

4828581-coinbase[.]com

The domains above all have a similar registration footprint. They share a low-cost registrar based in Vietnam, and all of them use Cloudflare for hosting services. The group has an average Domain Risk Score of 93. The last domain in the list has a high number of subdomains, such as “api”, “app”, “portal”, and “admin”, and has been labeled potentially malicious by VirusTotal and URLscan. The other domains in the list have not been labeled as such by those services.

Monitoring for DGA-created domains is a critical practice for protecting an organization’s brand, network, and platform. The ability to identify these domains and their associated malicious patterns is a proactive measure that disrupts threat actors’ activities and provides crucial visibility into their operations before they can target a network, turning a reactive defense into a proactive one.
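The pattern described in this section (seven digits, a hyphen, then the brand term) can be expressed as a short regular expression. The Python sketch below is one way to write it and is not necessarily the expression used in the original investigation. Domains are kept defanged in the list and re-fanged only for matching, and the last two entries are made-up non-matching examples.

```python
import re

# Seven digits, a hyphen, then "coinbase.com"; fullmatch() anchors both ends.
DGA_PATTERN = re.compile(r"\d{7}-coinbase\.com")


def refang(domain: str) -> str:
    """Turn a defanged domain such as name[.]com back into name.com."""
    return domain.replace("[.]", ".")


observed = [
    "2288544-coinbase[.]com",    # from the list above
    "0218652-coinbase[.]com",    # from the example above
    "coinbase-login[.]example",  # made-up, should not match
    "12345-coinbase[.]com",      # made-up, only five digits
]
matches = [d for d in observed if DGA_PATTERN.fullmatch(refang(d).lower())]
```

Using `fullmatch` instead of `search` avoids accidental hits on longer names that merely contain the pattern.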

Correlation with internal logs

For folks using Splunk Enterprise Security (ES), there are built-in features that allow you to create notable events when there is a match from your internal logs with a threat feed like NOD. Once ingested, the platform’s pre-built correlation searches will automatically check your internal logs for matches against the threat feed, generating a notable event when a match is found. This allows security organizations to monitor for the presence of these young domains leading to actionable insights as well as protection. For ES customers, check out this technical guide on ingesting Feed data as a new threat intelligence source.
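The matching idea behind that correlation can be sketched independently of ES. The Python example below checks whether a hostname seen in internal logs equals, or is a subdomain of, a domain in the NOD set. It illustrates the concept only and is not how ES implements its correlation searches. The function name, hostnames, and log source are hypothetical.

```python
def nod_match(hostname, nod_domains):
    """Return the NOD domain the hostname equals or falls under, else None."""
    labels = hostname.lower().rstrip(".").split(".")
    # Try the full name first, then each parent; stop before the bare TLD.
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in nod_domains:
            return candidate
    return None


# Placeholder data: a NOD set and hostnames pulled from internal DNS logs.
nod_domains = {"new-shop.example", "login-portal.test"}
queried = ["cdn.new-shop.example", "intranet.example", "notnew-shop.example"]
alerts = {host: nod_match(host, nod_domains) for host in queried}
```

Matching on whole labels rather than substrings keeps `notnew-shop.example` from being flagged just because it ends with the text of a listed domain.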

Conclusion

The new DomainTools Feed API transforms how security teams access and act on domain intelligence. By moving beyond the limitations of daily file transfers and improving flexibility, this API provides the near-real-time visibility needed to keep pace with modern threat actors.

As demonstrated through this Splunk integration, actionable intelligence is no longer a luxury but an operational necessity. The ability to ingest, filter, and correlate newly observed domains in near-real-time empowers security professionals to reduce risk exposure, stay ahead of emerging threats, and give threat actors more bad days. The speed, flexibility, and automation offered by this new API are a critical step in turning a reactive defense into a proactive one.