Magical Thinking in Internet Security

Introduction

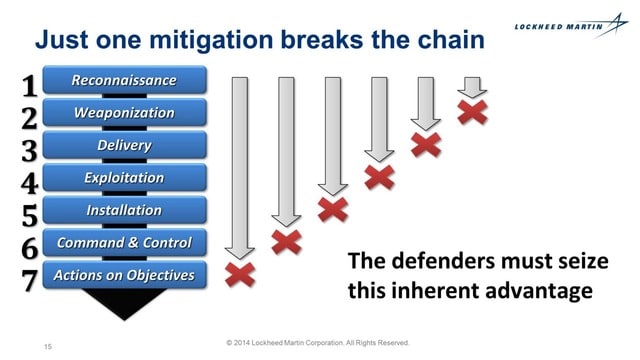

Today I’d like to talk about complexity, applying some of the thinking I shared a few years ago about DNS to the problems and solutions in the Internet security field. I am inspired here by both the Lockheed-Martin “kill chain” model:

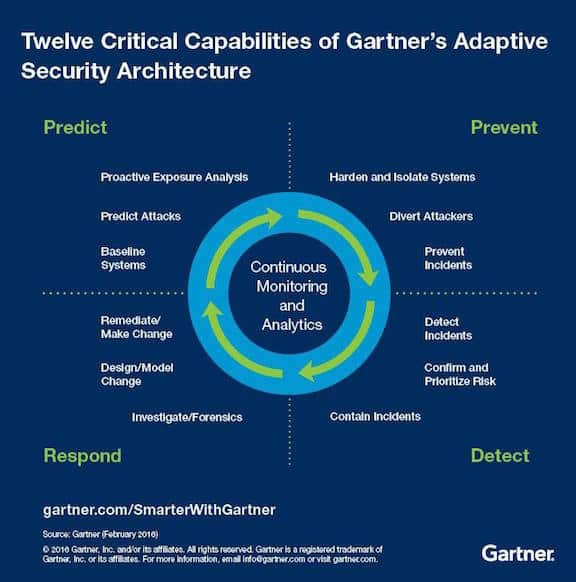

…and also by the Gartner “12-step” model:

Each of these models attempts to taxonomize a problem/solution space. That could help defenders understand their vulnerabilities from an attacker’s point of view, which could in turn help them prioritize their limited resources and measure the scope of their proposed solutions against the scope of an actual problem. And that sounds pretty good, because the Internet security industry is egregiously understaffed, underfunded, and outgunned.

However, these models have their roots in military doctrine, and if divorced from those roots, can yield a dangerously false sense of direction. In the original military doctrine, these models inform strategic decisions, not tactical ones: where should we position our forces in peacetime; how shall we allocate military spending; should we confront here or there, now or later; will we have enough advantage against the predicted capabilities of our adversary; and so on. The amount of research required to make these models accurate and useful is enormous, as America learned to its peril when it tried to apply WWII wisdom against insurgents and guerrillas in Southeast Asia, South Central Asia, and now the Levant. Simply put, your adversary does not have to behave according to your chosen model, and once they know your model, they will quite deliberately avoid behaving that way.

A Failure of Understanding

Most of us expect Internet security spending to increase from $70B to $140B by the end of this decade. But even at $70B, our spending is in the same order of magnitude as our losses. If we could find reliable negotiating partners, it could almost be cheaper to pay protection money to the criminals than to build the necessary defense works. Note that I would not propose to pay protection money. As the early American slogan had it, “millions for defense, but not one cent for tribute”, and I feel that quite strongly. But I’d like to aim for some hopeful future in which our defense is not as large a drain on our economy as the attacks we’re trying to prevent. To get there, we will need to understand where we are, how we got here, and why $70B per year of spending isn’t making much difference in our outcome.

We are, today, trying to secure technology we do not understand, against attackers who understand our technology better than we do. Worse still, we’re trying to secure technology that our technology vendors do not understand. The total distributed system of the Internet now has enough moving parts (“state variables” and “state transitions”) coming from enough eras and enough players that any claim of even substantial understanding of that totality is hogwash.

Yet what we’re spending money on is, generally speaking, more technology. We are adding complexity as our reflexive response to having too much complexity. That’s not sane, and could only happen through the madness of crowds: no one person acting alone would make a decision this lacking in sanity. It’s something we can only do together, as a complete economy. And it’s got to stop if we want to stop spending so much without changing our outcome.

Complexity

Understanding can be expressed as a simple fraction: (N / M), where M represents the total number of state variables and state transitions in the part of the Internet distributed system that we have control over or that has influence over us; N represents the portion of M that we know about and that we mostly understand and that we have reasonable confidence about. When we add more technology to our own networks, we absolutely always increase M, and we sometimes increase N, if we’re extremely well trained in the new technology, and that technology is “open source”, and if we have a strong corporate ethos about making the right set of long term staff and training investments so that the new technology does not transform into a black box for our cargo-culting successors’ successors’ successors.

So, best case, we make not only a new security technology investment but also the corresponding corporate “security technology DNA” investment, so that the fraction (N / M) becomes ((N + X) / (M + X)). For example, if N is 5, M is 10, and X is 2, we move from (5 / 10) to (7 / 12), or from 0.500 to 0.583, a net gain of 0.083 in our level of understanding of the system we’re depending on or protecting. This is, of course, an oversimplified model, and the vulnerabilities in our technology (both existing and incoming) also drive our risk level. But if we assume, as we must, that all technology has bugs, that the bad guys will find those bugs before the good guys do, and that new technology adds new ways to create bugs at about the same speed that it subtracts old ways, then we can reason that the risks of increased complexity from new technology, including security technology, can be managed only by investments that keep our understanding level from falling or, ideally, help it to rise.
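A minimal Python sketch of the (N / M) model, assuming N, M, and X are simple counts of state variables and state transitions (the model itself assigns no units). The function names are hypothetical illustrations. The sketch reproduces the worked example above and contrasts it with the common case in which new technology adds X to M but nothing to N.

```python
from fractions import Fraction


def understanding(n: int, m: int) -> Fraction:
    """Hypothetical helper: understanding level N / M, kept exact with Fraction."""
    if not 0 <= n <= m or m == 0:
        raise ValueError("need 0 <= N <= M and M > 0")
    return Fraction(n, m)


def add_technology(n: int, m: int, x: int, understood: bool) -> Fraction:
    """Hypothetical helper: new technology always adds x to M.

    It adds x to N only if we also make the training and staffing investment
    needed to actually understand it.
    """
    return understanding(n + x if understood else n, m + x)


before = understanding(5, 10)
best_case = add_technology(5, 10, 2, understood=True)    # (5 + 2) / (10 + 2)
black_box = add_technology(5, 10, 2, understood=False)   # 5 / (10 + 2)

print(f"{float(before):.3f} -> {float(best_case):.3f} (gain {float(best_case - before):.3f})")
print(f"{float(before):.3f} -> {float(black_box):.3f} (black box)")
```

The black-box case is the one to worry about: the ratio falls from 0.500 to 0.417 even though nothing that was understood before has been forgotten.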

That’s not happening. I know it’s not happening because many prospective customers of Farsight Security are asking me for holes whereas I’m trying to sell them shovels. They often don’t have the staff time or total skills necessary to do their own in-house integration and tool development, and they don’t have the budget necessary to attract, train, manage, and then retain the kind of people who can work at that level. They want safety, they want their risks managed, they have budget, and they need black boxes. We are working on giving our products and services broader appeal and reducing the need for expert consumers, but we know there’s only so far we can push that arc before we’ll be selling things our customers don’t understand, to help them protect the other things they don’t understand, which means trading a little temporary safety for a far worse long-term outcome.

That would be magical thinking, and also, partly insane.

Conclusion

Increased complexity without corresponding increases in understanding would be a net loss to a buyer. At scale, it’s been a net loss to the world economy.

What’s missing from the models inspired by military doctrine is that this isn’t a war or a battle; it’s a way of life, and it’s forever. And our strategic options don’t include whether to fight, or when, or on what ground. All of those options are in the hands of our adversaries.

The total cost of ownership (TCO) of new technology products and services, including security-related products and services, should be fudge-factored by at least 3X to account for the cost of reduced understanding. That extra 2X represents new spending: on training, auditing, staff growth and retention, and in-house integration.
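A small Python sketch of the 3X rule of thumb. The function name and the $100,000 sticker TCO are hypothetical. The essay gives only the factor, not how the extra 2X should be split among training, auditing, staff, and integration, so the sketch reports that extra amount as a single understanding budget.

```python
def understanding_adjusted_tco(sticker_tco: float, factor: float = 3.0) -> tuple[float, float]:
    """Hypothetical helper: return (adjusted TCO, extra understanding budget).

    factor=3.0 follows the "at least 3X" rule of thumb. The extra
    (factor - 1) x sticker_tco is the budget for training, auditing,
    staff growth and retention, and in-house integration.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    adjusted = sticker_tco * factor
    return adjusted, adjusted - sticker_tco


adjusted, extra = understanding_adjusted_tco(100_000)
print(f"adjusted TCO {adjusted:,.0f}, of which {extra:,.0f} buys understanding")
```

Because the rule says "at least" 3X, `factor` is left as a parameter rather than hard-coded.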

A defender may get more advantage from turning off old unpatchable systems than from buying a new firewall. Almost any decrease in complexity will improve the local understanding level.
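In terms of the (N / M) fraction, decommissioning a system that contributed k units of state to M, of which j were also counted in N, moves the level to (N − j) / (M − k). For 0 < k < M, that is higher than N / M exactly when j / k < N / M, that is, when the removed system was understood less well than the whole. This is one way to read the “almost any” above. A minimal Python sketch with hypothetical names and counts:

```python
from fractions import Fraction


def after_removal(n: int, m: int, removed_total: int, removed_understood: int) -> Fraction:
    """Hypothetical helper: understanding level after decommissioning a system.

    removed_total is the state the system contributed to M; removed_understood
    is the part of that state that was also counted in N.
    """
    if not 0 <= n <= m:
        raise ValueError("need 0 <= N <= M")
    if not 0 <= removed_understood <= n:
        raise ValueError("cannot remove more understood state than N")
    if not 0 <= removed_total - removed_understood <= m - n:
        raise ValueError("cannot remove more poorly understood state than M - N")
    if removed_total >= m:
        raise ValueError("something must remain")
    return Fraction(n - removed_understood, m - removed_total)


# An old unpatchable system that nobody understands any more: 5/10 -> 5/8.
print(float(after_removal(5, 10, 2, 0)))
# Removing a well-understood system instead lowers the level: 5/10 -> 3/8.
print(float(after_removal(5, 10, 2, 2)))
```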

We’ve all got to stop trafficking, as either buyers or sellers, in black boxes and the magical thinking that goes with them.

Dr. Paul Vixie is the CEO of Farsight Security, Inc.